On September 10, 2025, Tyler Robinson allegedly shot and killed Charlie Kirk, leaving shell casings marked with internet-style jokes such as “If you read this, you are gay. LMAO” and “notices bulges OwO what’s this?”

On May 14, 2022, Payton Gendron drove from Conklin to Buffalo and opened fire in a grocery store with a rifle engraved with phrases like “#BLM Mogged” and “We wuz kangz,” both tied to white supremacist meme culture on 4chan.

Back on June 8, 2017, Randy Stair murdered three co-workers in a supermarket after years of posting journals, videos, and tweets inspired by the Columbine shooters.

In June 2019, Ethan Neineker shared a meme of Hillary Clinton shooting Jeffrey Epstein, and six years later carried out a shooting that killed three people including a 4-year-old girl.

In May 2022 in Uvalde, Texas, Salvador Ramos messaged a German teenager that he had just shot his grandmother in the face and was about to attack an elementary school. She skeptically replied, “Cool.”

In March 2019, Brenton Tarrant released a meme-filled manifesto with ironic references like “Subscribe to PewDiePie” shortly before killing 51 people in two mosques in Christchurch, New Zealand.

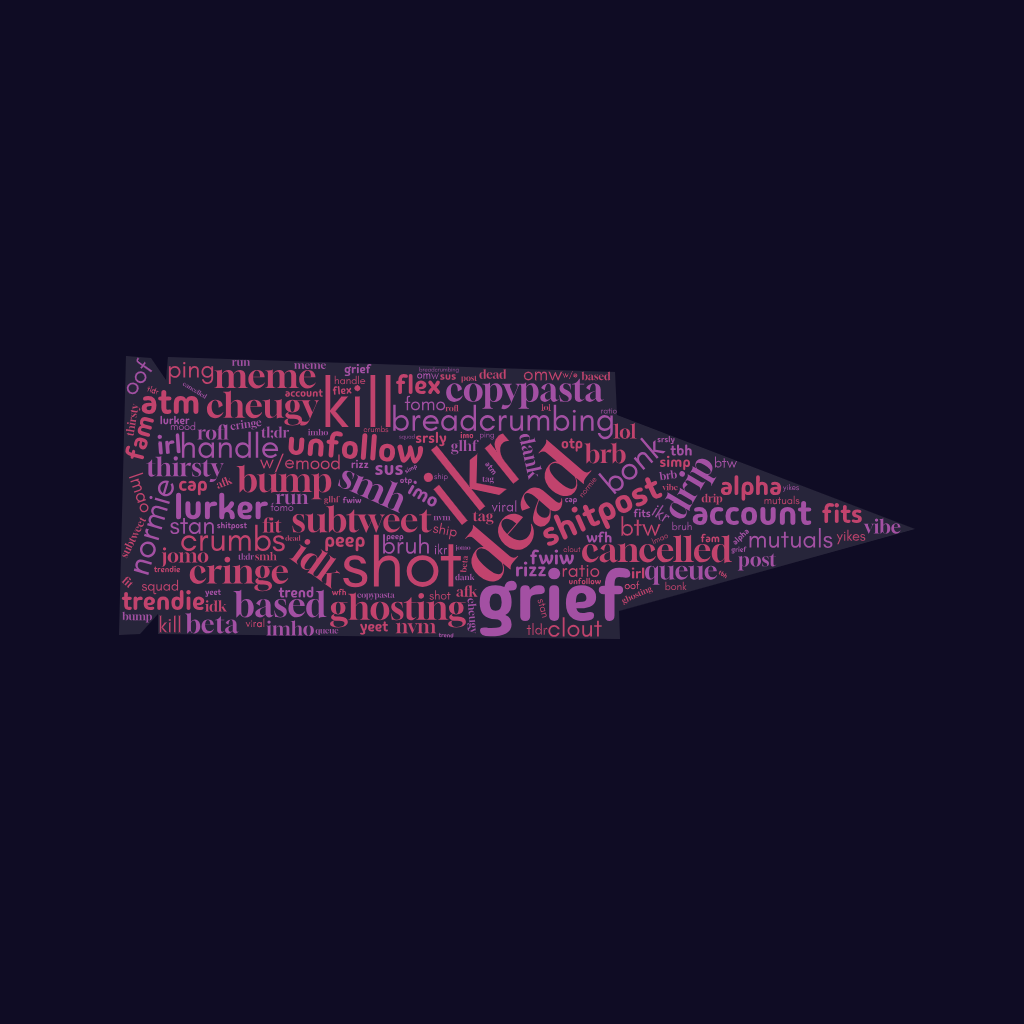

This is becoming a pattern. Memes, ironic jokes, and manifesto style have become breadcrumbs of a digital pipeline that turns grievance into killing.

Accelerationism is the belief that the fastest way to cause major political change is to speed up the forces already shaping society, such as technology, capitalism, and social breakdown. There are nonviolent, academic versions, but also dangerous online strains. The latter celebrate accelerating collapse and sometimes look to violence as a shortcut to radical change. Younger, more impressionable people drift from edgier corners of the internet into tighter communities, such as school shooter Nikolas Cruz’s group chat, where racist, misogynist, and accelerationist ideas are normalized, stitched into coherent justifications, and carried into the real world.

Online recruitment, or the pull toward these dark areas, often begins as entertainment but gradually leads somewhere else. What looks like dark humor or “shitposting” functions as a filter. Jokes and coded language let users test loyalty without overt commitment; upvotes and status signals reward escalation. The Buffalo shooter’s engravings included numbers with coded radical meanings, such as “13/52” on his shotgun. That figure is shorthand for a racist propaganda claim that white supremacists use to depict black people as inherently criminal: 13 refers to the supposed percentage of the U.S. population that is black, and 52 to the alleged percentage of murders committed by black people. Another number on a barrel, “14,” is most likely a reference to the “14 Words,” the best-known white supremacist slogan, coined by David Lane, a member of the white supremacist terrorist group known as The Order.

The quote reads, “We must secure the existence of our people and a future for white children.”

Private DMs and invite-only servers move promising recruits into closed spaces where one-on-one mentoring and peer pressure harden perspectives. Platforms’ recommendation algorithms and cross-posting make the pipeline more efficient by surfacing more extreme content to curious users. These are all tools by which belief can become reality.

Solutions to this problem, however, are logistically and politically messy, and heavy-handed monitoring risks trading one threat for another. Speedy takedowns, content-sharing agreements, and cross-platform intelligence can prevent ideas from spreading, but broad surveillance or public control would limit everyday speech and create tools that authoritarian states can repurpose.

China’s model of pervasive cameras, data aggregation, and AI-driven monitoring shows how surveillance can suppress protest and control entire populations. We can stop these pipelines without descending into that madness by favoring strong judicial oversight and independent auditing of any automated systems used to flag threats. However, relying entirely on human reviewers is difficult. A video from VICE shows the dangers of having moderators manually review online content: the work usually leads to desensitization to violence and, in many cases, serious mental trauma.

But the key to understanding this pipeline is knowing the human cost of these brutal events. That cost should be the moral center of coverage and policy. Naming attackers, as this article and many headlines do, elevates them into the fame they sought, letting their hate live on through the internet’s ghostly highways. Letting their names fade while amplifying the victims, survivors, and communities preserves memory without contributing to the fear they built up.

Remember the wounded, the parents, the mornings lost and civic scars. Publish their names and their stories, fund support, and measure policy not in the number of arrests or bans, but in the number of families that don’t have to live with this grief.